@article{Chen_deblurgs2024,

author = {Chen, Wenbo and Liu, Ligang},

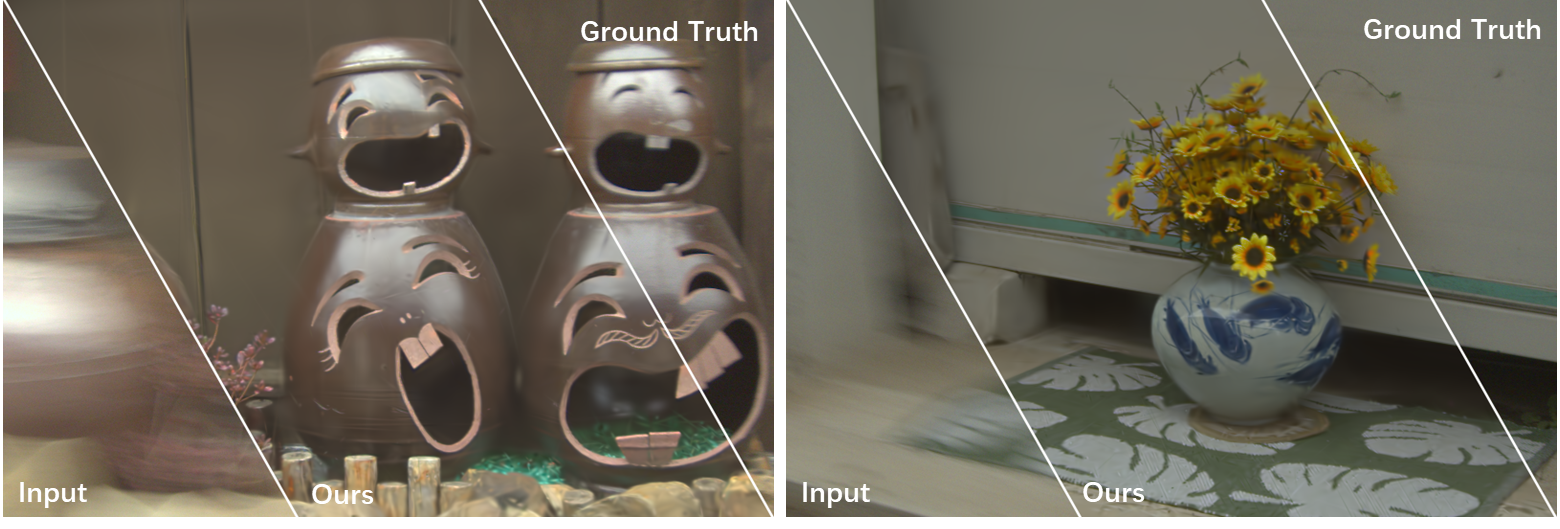

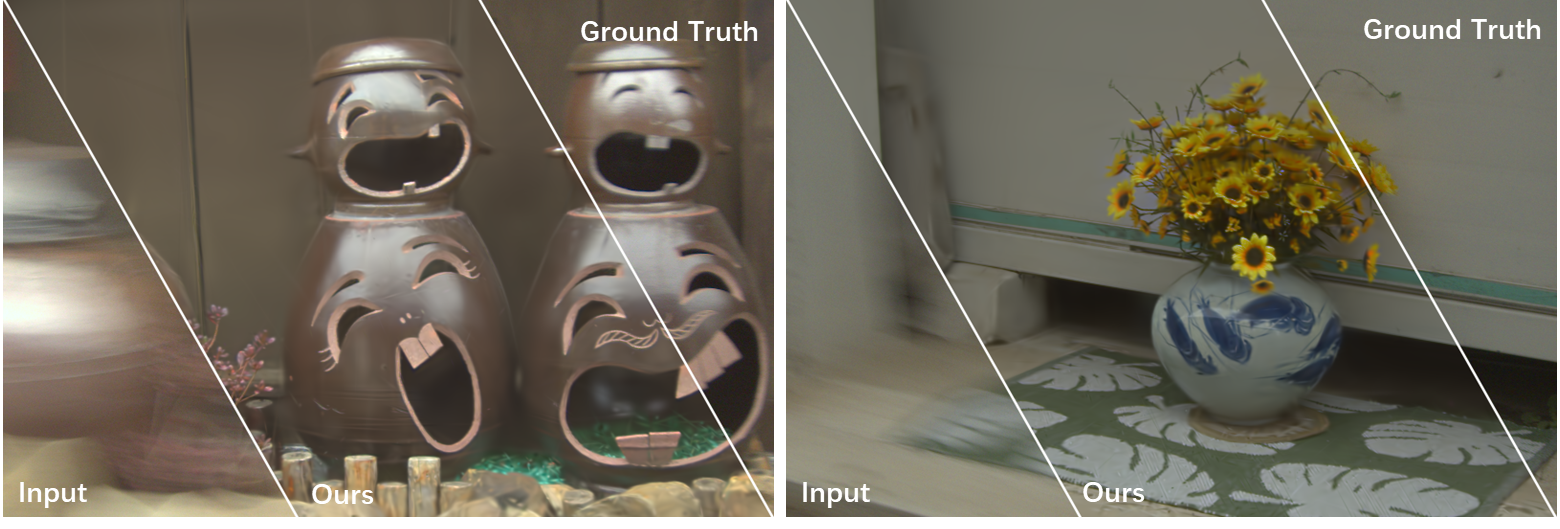

title = {Deblur-GS: 3D Gaussian Splatting from Camera Motion Blurred Images},

journal = {Proc. ACM Comput. Graph. Interact. Tech. (Proceedings of I3D 2024)},

year = {2024},

volume = {7},

number = {1},

numpages = {13},

location = {Philadelphia, PA, USA},

url = {http://doi.acm.org/10.1145/3651301},

doi = {10.1145/3651301},

publisher = {ACM Press},

address = {New York, NY, USA},

}